9 Core Rendering Engine Techniques Used in 3D Model Design

Whichever 3D modeling or design program you use, rendering is one of the most important steps when the time comes to generate the final graphic output. The rendering engine built into most modeling software uses a variety of processes to complete a scene. Keep reading to find out more about each of these techniques and why some are so processor-heavy.

9 Core Rendering Engine Techniques

1. Shading

Shading is the process of applying levels of light, darkness, and color to objects in a rendered image. When a scene has a light source, be it a lamp, sun object, or emission material, shaders can be used to produce a myriad of special graphic effects. Shaders can modify the positions of an object's vertices and the color of each of its pixels, including more complex adjustments to hue, saturation, and brightness.

Your 3D modeling program will also offer flat shading and smooth shading. Flat shading evaluates the shading only once for each polygon of an object's surface, so each face has a single uniform tone and the object looks faceted, with sharp edges between faces. Smooth shading interpolates values across each polygon so that the shading changes from pixel to pixel, producing a seemingly smooth transition between the polygons of an object.
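To make the difference concrete, here is a minimal Python sketch that assumes a single directional light and simple Lambertian (diffuse) lighting evaluated along one row of pixels across a polygon. The function names are hypothetical and not part of any particular 3D package; the smooth variant interpolates normals and lights each pixel separately, in the style of Phong shading.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert(normal, light_dir):
    """Diffuse brightness: cosine of the angle between the surface normal
    and the direction toward the light, clamped at 0."""
    return max(0.0, dot(normalize(normal), normalize(light_dir)))


def flat_shade(face_normal, light_dir, n_pixels):
    # Flat shading: one lighting evaluation per polygon, reused for every pixel.
    value = lambert(face_normal, light_dir)
    return [value] * n_pixels


def smooth_shade(normal_a, normal_b, light_dir, n_pixels):
    # Smooth shading: interpolate the normal between the polygon's edges
    # and evaluate the lighting separately at each pixel.
    out = []
    for i in range(n_pixels):
        t = i / (n_pixels - 1)
        n = tuple((1 - t) * a + t * b for a, b in zip(normal_a, normal_b))
        out.append(lambert(n, light_dir))
    return out
```

With a face normal of `(0, 0, 1)` and light shining straight on, `flat_shade` returns the same value for every pixel, while `smooth_shade((-1, 0, 1), (1, 0, 1), (0, 0, 1), 3)` brightens toward the middle of the polygon, where the interpolated normal faces the light directly.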

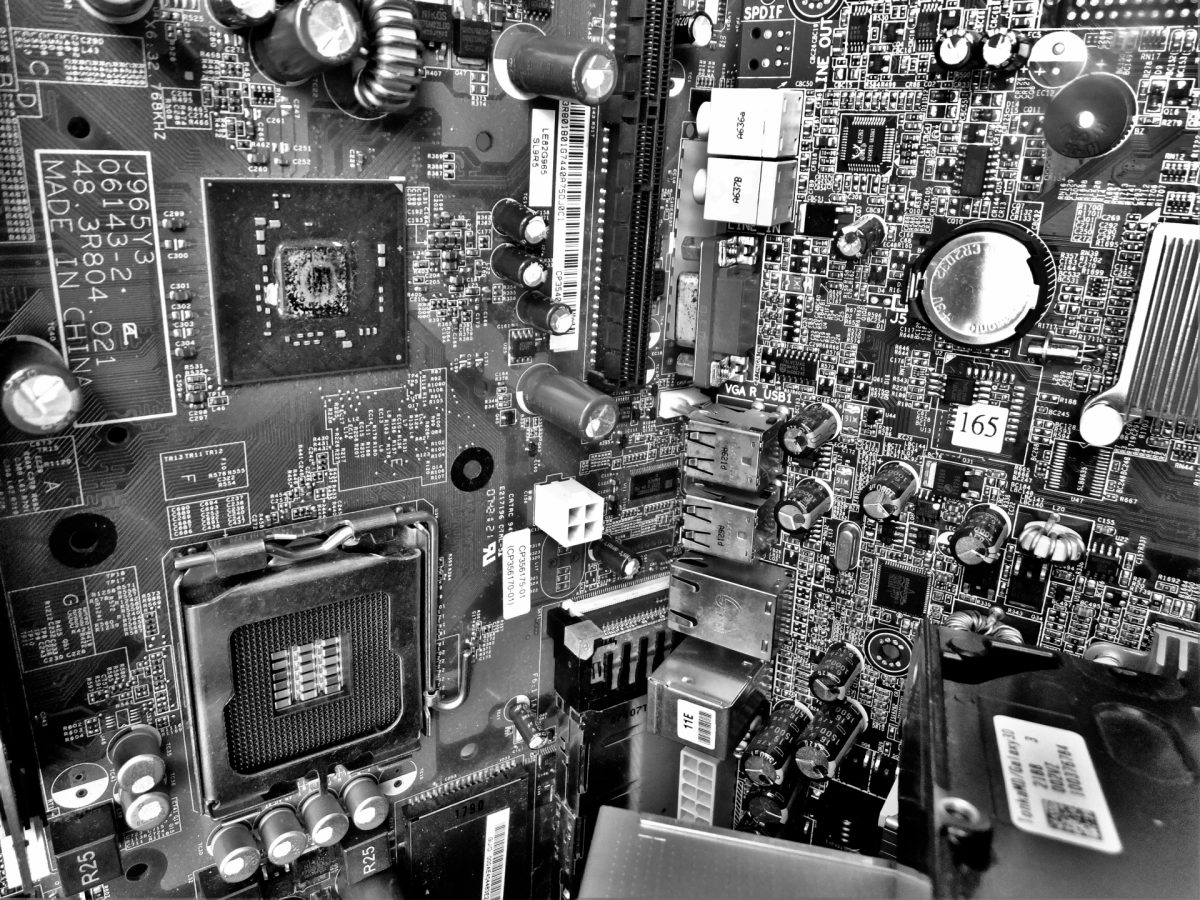

Other specialized shader types include vertex shaders, primitive shaders, and geometry shaders, each performing specific tasks that allow for different effects and visuals. Though very impressive, shaders are computationally intensive. Even on simply lit models, a shader runs for every vertex or pixel it applies to, no matter where the object sits in a scene or environment. Running shaders consistently on a CPU can easily slow it to a crawl, so shader operations are now usually offloaded to the GPU, which runs many shader instances in parallel and allows for more control when modifying materials or textures.

Shaders are widely used for VFX post-processing in film and television, CGI imagery, and video game design, and they are usually the first step in making a scene or object look believably real or in creating jaw-dropping visual effects.

2. Ray Tracing

Ray tracing is the technique of rendering a scene by tracing the paths of light as rays through each pixel of the image while simulating how natural light behaves when it interacts with objects. This technique attempts to mimic real-world light properties and effects such as diffusion, reflection, shallow depth of field, and soft shadows. The end result is a stunning simulated visual with breathtaking photorealism.

The popularity of 3D software that uses ray tracing-based rendering has grown over the years, as its realism is second to none among light-based algorithms and is difficult to recreate with rendering methods such as scanline rendering. Its main drawback is performance. Depending on a scene's complexity, ray tracing can be tremendously taxing on your workstation, especially when used for real-time rendering.

The cost of scanline rendering depends mainly on the number of pixels on screen in a single scene, whereas ray tracing follows each ray of light (no matter where it sits in a scene) and renders each one and its reflections, or bounces, individually. At its maximum settings, a render can approach true-to-life photorealism. However, such renders are usually impractical on most consumer workstations without a render farm because of the computing power required.
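The recursive core of a ray tracer can be sketched in a few dozen lines of Python. This is a simplified illustration, not any renderer's actual implementation: it assumes spheres only, grayscale brightness, one directional light, mirror-style reflection, and no shadow rays. The scene format and function names are hypothetical.

```python
import math

BACKGROUND = 0.2  # grayscale value returned when a ray hits nothing


def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))


def intersect(origin, direction, sphere):
    """Distance along a unit-length ray to the nearest sphere hit, or None."""
    center, radius = sphere[0], sphere[1]
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind (or at) the ray origin
            return t
    return None


def trace(origin, direction, spheres, light_dir, depth=0, max_depth=3):
    """Follow one ray; a reflective hit spawns a bounce ray recursively.
    Each sphere is (center, radius, base_brightness, reflectivity)."""
    if depth > max_depth:
        return 0.0
    nearest, hit = None, None
    for s in spheres:
        t = intersect(origin, direction, s)
        if t is not None and (nearest is None or t < nearest):
            nearest, hit = t, s
    if hit is None:
        return BACKGROUND
    center, _, base, reflectivity = hit
    point = add(origin, scale(direction, nearest))
    normal = normalize(sub(point, center))
    diffuse = base * max(0.0, dot(normal, light_dir))
    if reflectivity <= 0:
        return diffuse
    # Mirror reflection direction: r = d - 2 (d . n) n
    r = normalize(sub(direction, scale(normal, 2 * dot(direction, normal))))
    bounced = trace(point, r, spheres, light_dir, depth + 1, max_depth)
    return (1 - reflectivity) * diffuse + reflectivity * bounced


def render(width, height, spheres, light_dir):
    """One primary ray per pixel from a pinhole camera at the origin looking down +z."""
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            row.append(trace((0.0, 0.0, 0.0), normalize((x, y, 1.0)), spheres, light_dir))
        image.append(row)
    return image
```

The `max_depth` parameter plays the role of a bounce limit: every extra level of recursion can add another `trace` call per pixel, which is one reason deep, highly reflective scenes take so long to render.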

That said, it's an extremely adjustable process, and its settings can be updated and altered in real time. Limiting values such as the number of samples per pixel and the maximum number of bounces per light path reduces the processing power needed to render each light path. If you have the time and patience, ray tracing can help you generate beautiful CGI and VFX for film and television. For now, it's a bit less suited for real-time applications such as gaming and VR.

3. Ray Casting

Once used interchangeably with ray tracing, ray casting performs very different functions and serves a distinctly different purpose. Ray casting is a basic algorithm for rendering three-dimensional spaces without the recursive light tracing that simulates real-world light properties. It skips rendering processes like reflections, refractions, and shadow falloff, which makes it fast enough for real-time applications like 3D video games and VR rendering.

With clever use of texture and bump mapping, ray casting-based rendering allows quick and simple real-time rendering of solid planar and non-planar models. This technique was used in early 3D video games such as Wolfenstein 3D.
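A grid-based ray caster in the style of Wolfenstein 3D can be sketched as follows: one ray per screen column steps through a 2D map until it hits a wall, and the wall slice is drawn taller the closer it is. This is a minimal Python sketch under assumed conventions (the map layout, field of view, and screen size are hypothetical). Each ray stops at the first wall, with no reflection or refraction.

```python
import math

# Hypothetical map: '#' cells are walls, '.' cells are open floor.
GRID = [
    "#####",
    "#...#",
    "#...#",
    "#...#",
    "#####",
]


def cast_ray(px, py, angle, grid=GRID, max_steps=64):
    """Step one ray through the grid cell by cell (a DDA walk) and return the
    distance to the first wall it enters. No bounces are traced."""
    dx, dy = math.cos(angle), math.sin(angle)
    map_x, map_y = int(px), int(py)
    # Ray length needed to cross one whole cell horizontally / vertically.
    delta_x = abs(1 / dx) if dx != 0 else math.inf
    delta_y = abs(1 / dy) if dy != 0 else math.inf
    # Step direction and ray length to the first vertical / horizontal grid line.
    if dx < 0:
        step_x, side_x = -1, (px - map_x) * delta_x
    else:
        step_x, side_x = 1, (map_x + 1 - px) * delta_x
    if dy < 0:
        step_y, side_y = -1, (py - map_y) * delta_y
    else:
        step_y, side_y = 1, (map_y + 1 - py) * delta_y
    for _ in range(max_steps):
        # Advance to whichever grid line the ray reaches first.
        if side_x < side_y:
            dist, side_x, map_x = side_x, side_x + delta_x, map_x + step_x
        else:
            dist, side_y, map_y = side_y, side_y + delta_y, map_y + step_y
        if grid[map_y][map_x] == "#":
            return dist
    return math.inf


def render_columns(px, py, facing, fov=math.pi / 3, width=8, screen_h=100):
    """Cast one ray per screen column; nearer walls produce taller slices."""
    heights = []
    for col in range(width):
        angle = facing - fov / 2 + fov * col / (width - 1)
        # Multiply by cos(angle - facing) to remove fisheye distortion.
        dist = cast_ray(px, py, angle) * math.cos(angle - facing)
        heights.append(int(screen_h / dist))
    return heights
```

Because each column needs only one short walk through the grid, the cost grows with screen width rather than with the number of light bounces, which is what made this approach practical on early hardware.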

Ray Tracing vs. Ray Casting

Natural light travels from its source until it meets a surface, which may reflect, refract, or absorb it. If reflected, the light ray bounces in one or many directions, producing more light rays. If absorbed, the light's intensity decreases. If the surface is transparent or translucent, the light refracts into the material and may even change color as it passes through. Ray tracing simulates these processes by following rays from bounce to bounce, while ray casting stops at the first surface each ray hits.

4. Refraction

Refraction is a technique that allows light rays to bend as they pass through transparent or translucent surfaces when rendering. When a light ray enters a transparent material, its path is offset inside the material before it exits the other side. Although refraction is calculated as part of ray tracing, it can still be enabled and customized independently in the render settings of your 3D software.
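How far a ray bends is governed by Snell's law, n1 sin θ1 = n2 sin θ2, where n1 and n2 are the refractive indices of the two materials (about 1.0 for air and roughly 1.5 for common glass). A minimal Python sketch of the vector form that ray tracers commonly use follows. The function name is hypothetical, and the sketch assumes unit-length vectors with the normal facing the incoming ray.

```python
import math


def refract(direction, normal, n1, n2):
    """Bend a unit direction vector crossing from a medium with index n1 into
    one with index n2 (Snell's law). `normal` is the unit surface normal on
    the side the ray comes from. Returns None on total internal reflection."""
    eta = n1 / n2
    cos_i = -sum(d * n for d, n in zip(direction, normal))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # total internal reflection: no transmitted ray
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * d + (eta * cos_i - cos_t) * n
                 for d, n in zip(direction, normal))
```

When light passes from a denser material into a less dense one at a steep enough angle, no refracted ray exists (total internal reflection), which the sketch signals by returning `None`; a ray tracer would then follow only the reflected ray.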

5. Texture Mapping

Texture mapping is the process of applying high-quality detail, surface texture, or color information to a 3D model. “Texture maps” can be digitally painted, photographed, or manipulated images created in 2D image-editing software like Photoshop, Illustrator, and GIMP, or materials painted directly onto 3D surfaces using advanced painting software like Substance Painter or ZBrush. These maps are then applied, or “mapped,” to the model through its UV coordinates, which determine which part of the image lands on each part of the surface. A simplified analogy is to think of the model as a Christmas present and the texture map as colorful wrapping paper.
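At its simplest, mapping a texture means turning a surface point's UV coordinates into a pixel (texel) position in the image. The Python sketch below assumes UVs in the 0 to 1 range, a texture stored as a list of rows, and the convention that v = 0 is the bottom edge (conventions differ between tools). `sample_texture` is a hypothetical name, and real renderers usually add filtering such as bilinear interpolation or mipmapping.

```python
def sample_texture(texture, u, v):
    """Nearest-neighbour lookup: map UV coordinates in [0, 1] to a texel.
    `texture` is a list of rows with row 0 at the top; v = 0 is the bottom edge."""
    height, width = len(texture), len(texture[0])
    # Clamp so that u == 1.0 or v == 0.0 still lands on the last texel.
    x = min(int(u * width), width - 1)
    y = min(int((1.0 - v) * height), height - 1)
    return texture[y][x]


# A hypothetical 2x2 "image" whose texels are labelled for readability.
checker = [["A", "B"],
           ["C", "D"]]
```

For example, `sample_texture(checker, 0.25, 0.75)` reads the top-left texel, and every point on the model that carries those UVs receives that texel's color, just as the wrapping-paper analogy suggests.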

There are several texture mapping techniques used during rasterization (converting vector geometry into pixels), each producing different results depending on what you're looking for:

- Forward texture mapping

- Affine mapping

- Multitexturing

- Inverse mapping
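Affine mapping, one of the techniques listed above, interpolates texture coordinates linearly across the screen. The Python sketch below contrasts it with perspective-correct interpolation (a related technique not in the list, included here only for comparison), which interpolates u/w and 1/w and then divides, where w is each vertex's depth from the camera. The function names are hypothetical.

```python
def affine_u(u0, u1, t):
    """Affine mapping: interpolate the texture coordinate linearly in screen
    space. Cheap, but textures warp on surfaces seen at an angle."""
    return (1 - t) * u0 + t * u1


def perspective_u(u0, w0, u1, w1, t):
    """Perspective-correct mapping: interpolate u/w and 1/w linearly in screen
    space, then divide. w0 and w1 are the depths of the two endpoints."""
    u_over_w = (1 - t) * (u0 / w0) + t * (u1 / w1)
    one_over_w = (1 - t) * (1 / w0) + t * (1 / w1)
    return u_over_w / one_over_w
```

Halfway across a span whose far end is three times as deep as its near end, `affine_u(0, 1, 0.5)` returns 0.5, but `perspective_u(0, 1, 1, 3, 0.5)` returns 0.25. That mismatch is what makes affine-mapped textures appear to bend and swim on surfaces that recede from the camera; when both ends are at the same depth, the two methods agree.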

It's also possible to apply lighting as a texture, a technique called render mapping, or “baking.” Baking renders complex light paths or rays at high resolution ahead of time and stores the result as a surface texture that can be UV mapped onto a model or object later.

This process is regularly used in video game development to reduce polygon counts and rendering costs, making detailed lighting practical in real-time applications.
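The idea behind baking can be sketched in Python: lighting is computed once per texel of a “lightmap” ahead of time, and at run time shading becomes a cheap texture lookup. This minimal sketch bakes only direct diffuse light from one directional light; production bakers typically also trace shadows and bounced (indirect) light. `normal_at` is a hypothetical callback standing in for the model's surface data, and all function names are illustrative.

```python
import math


def lambert(normal, light_dir):
    """Diffuse brightness; both vectors are normalized here."""
    ln = math.sqrt(sum(c * c for c in normal))
    ll = math.sqrt(sum(c * c for c in light_dir))
    return max(0.0, sum(a * b for a, b in zip(normal, light_dir)) / (ln * ll))


def bake_lightmap(normal_at, light_dir, size):
    """Offline step: evaluate lighting at the centre of every texel of a
    size x size lightmap. normal_at(u, v) returns the surface normal there."""
    lightmap = []
    for row in range(size):
        v = (row + 0.5) / size
        lightmap.append([lambert(normal_at((col + 0.5) / size, v), light_dir)
                         for col in range(size)])
    return lightmap


def shade_with_lightmap(lightmap, albedo, u, v):
    """Run-time step: lighting is a texture lookup, not a calculation."""
    size = len(lightmap)
    x = min(int(u * size), size - 1)
    y = min(int(v * size), size - 1)
    return albedo * lightmap[y][x]
```

The expensive work in `bake_lightmap` happens once, before the game ships; each frame then pays only for the lookup in `shade_with_lightmap`, which is why baked lighting suits real-time rendering of static scenery.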

6. Bump Mapping

Somewhat similar to texture mapping, bump mapping is the process of simulating bumps, protuberances, and displacements on the surface of a 3D model. The intent is to add realism by suggesting erosion, volume, or damage on an object's surface.

The resulting effect is only cosmetic once rendered: the underlying surface geometry is not modified in any way; it only appears to have been. Instead, the bump map is used to alter the surface normal (the direction the surface faces at each point), and light, reflection, and shadow are recalculated with the altered normal, giving the illusion of detail where there is none.
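The normal perturbation can be sketched in Python: the slope of a grayscale height map at a texel tilts a flat normal, and lighting then uses the tilted normal while the geometry stays untouched. This minimal sketch assumes the height map's x and y axes line up with the surface's tangent directions and uses central differences to estimate the slope. The function names and the `strength` parameter are hypothetical.

```python
import math


def bumped_normal(height, x, y, strength=1.0):
    """Tilt a flat surface normal (0, 0, 1) by the slope of a height map
    (a 2D list of floats) at texel (x, y). Only the normal changes."""
    h, w = len(height), len(height[0])
    # Central differences, with neighbour indices clamped at the edges.
    dhdx = (height[y][min(x + 1, w - 1)] - height[y][max(x - 1, 0)]) / 2.0
    dhdy = (height[min(y + 1, h - 1)][x] - height[max(y - 1, 0)][x]) / 2.0
    # A surface rising toward +x faces partly toward -x, and likewise for y.
    n = (-strength * dhdx, -strength * dhdy, 1.0)
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    return (n[0] / length, n[1] / length, n[2] / length)


def diffuse(normal, light_dir):
    """Lambertian brightness computed with the (possibly bumped) normal."""
    length = math.sqrt(sum(c * c for c in light_dir))
    return max(0.0, sum(n * l / length for n, l in zip(normal, light_dir)))
```

On a height map that ramps upward in x, the bumped normal leans away from the slope, so a light placed on that side shades the texel brighter than it would shade a flat surface, even though no vertex has moved.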

Bump mapping is extremely useful and not processor-intensive, making the result quick to produce. Its main drawback is that the illusion breaks down in some situations, such as in reflections, along an object's silhouette, and in the shadows it casts. Because only the surface normal is altered, not the surface geometry itself, silhouettes and shadows cast onto other surfaces do not show the displacement. A common solution to this limitation is displacement mapping, in which a height map actually moves the surface geometry of a 3D model, at a higher rendering cost.

7. Volumetric Lighting (Fog, Smoke, and Steam)

In 3D rendering software, volumetric lighting is a very useful process that treats light as something that fills space, allowing for effects such as fog, smoke, or steam. It enhances the lighting and distance effects in a rendered scene by using transparent objects that are rendered not as surface geometry but as a “volume,” a region of space whose density scatters and absorbs light. Sunbeams streaming through a window are a standard example of volumetric lighting, while steam rising from warm ground after a rainstorm is an example of fog.
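Renderers commonly evaluate volumes by ray marching: stepping along each camera ray through the medium, dimming light according to the Beer-Lambert law, and adding the light scattered toward the camera at each step. The Python sketch below is a simplified illustration under stated assumptions: the fog is lit uniformly (so it produces haze rather than distinct light shafts, which would also need shadow tests), all extinction is treated as scattering, and `density_at` is a hypothetical callback.

```python
import math


def march_fog(density_at, ray_length, light_intensity, steps=64):
    """Ray-march through a participating medium along one camera ray.
    density_at(s) gives the fog density at distance s from the camera.
    Returns (in-scattered light, remaining transmittance)."""
    ds = ray_length / steps
    transmittance = 1.0
    scattered = 0.0
    for i in range(steps):
        s = (i + 0.5) * ds  # sample at the middle of each segment
        sigma = density_at(s)
        # Light scattered toward the camera in this segment, dimmed by the
        # fog already between this segment and the camera.
        scattered += transmittance * sigma * light_intensity * ds
        # Beer-Lambert law: attenuation across this segment.
        transmittance *= math.exp(-sigma * ds)
    return scattered, transmittance
```

The final pixel value would then be `scattered + transmittance * background`, so denser or deeper fog hides more of what lies behind it and glows more with the light it scatters.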

8. Depth of Field

When your scene is complete and you are ready to render, the next object you will need to place in your scene is a camera. Depending on your software, your camera's settings are highly customizable, sometimes right down to the type of lens you wish to use. Depth of field is another customizable camera setting that affects how the whole scene appears.

Depth of field, or DOF, is the distance between the nearest and farthest objects in a scene that appear acceptably sharp. It is determined by your camera's focal length, the distance to the subject, and the lens aperture, all of which can be adjusted.
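For a rough sense of how these settings interact, the thin-lens approximation gives the near and far limits of acceptable sharpness from the focal length f, the f-number N, the subject distance s, and an acceptable circle of confusion c, via the hyperfocal distance H = f²/(Nc) + f. The Python sketch below uses those standard formulas. The 0.03 mm circle of confusion is a common full-frame assumption, not a value taken from any specific 3D package, and the function name is hypothetical.

```python
import math


def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Thin-lens depth of field. Returns (near_limit, far_limit) in mm.
    coc_mm is the acceptable circle of confusion (assumed full-frame value)."""
    f, n, s, c = focal_mm, f_number, subject_mm, coc_mm
    hyperfocal = f * f / (n * c) + f
    near = s * (hyperfocal - f) / (hyperfocal + s - 2 * f)
    # Focused at or beyond the hyperfocal distance, sharpness extends to infinity.
    far = s * (hyperfocal - f) / (hyperfocal - s) if s < hyperfocal else math.inf
    return near, far
```

For a 50 mm lens focused at 3 m, `depth_of_field(50, 8, 3000)` gives roughly 2.34 m to 4.19 m in focus, while opening up to f/2 narrows that to roughly 2.80 m to 3.23 m; this shallow zone is the blurred-background look that renders often use for artistic effect.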

In renders, depth of field is usually more of an artistic effect than a technical achievement, meant to draw the eye and visually impress.

9. Motion Blur

Motion blur is another feature of your software's virtual camera. It is the streaking or smearing of moving objects within a rendered frame, usually used for film VFX and animation. With a real camera, the effect occurs naturally, because objects keep moving while the shutter is open, especially during long exposures or at slow shutter speeds. With CGI, it's a bit more complicated. A 3D renderer computes each frame as an instantaneous snapshot, as if the camera had an infinitely fast shutter, so it cannot reproduce the effect on its own. One way to simulate it is to apply geometry shaders that extrude an object's geometry along its direction of motion when objects or the camera move in a scene.
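Besides the geometry-shader approach, a common way renderers produce motion blur is temporal sampling: evaluating the scene at several instants while the virtual shutter is open and averaging the results. The Python sketch below shows the idea for a single row of pixels and a hypothetical one-dimensional object; the names and the scene are assumptions for illustration.

```python
def coverage_1d(pixel_x, object_x, half_width=0.5):
    """1.0 if the pixel centre lies inside the (hypothetical) 1D object, else 0.0."""
    return 1.0 if abs(pixel_x - object_x) <= half_width else 0.0


def render_row(object_x_at, width, shutter_open, shutter_close, samples):
    """Render one row of pixels with motion blur by averaging the scene at
    several instants while the virtual shutter is open.
    object_x_at(t) gives the object's position at time t."""
    row = [0.0] * width
    for i in range(samples):
        # Spread the sample times evenly across the shutter interval.
        t = shutter_open + (shutter_close - shutter_open) * (i + 0.5) / samples
        x = object_x_at(t)
        for px in range(width):
            row[px] += coverage_1d(px + 0.5, x) / samples
    return row
```

A stationary object covers a single pixel at full intensity, while an object that moves four pixels during the exposure is smeared across five pixels at partial intensity, which reads as motion blur. More samples give a smoother smear at proportionally higher cost.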

This process simulates lifelike smearing as seen in film and photography and is used to great effect in video games and animation.

Simplify Heavy Rendering with an Online Render Farm

These 9 rendering techniques are just a taste of what lies within the average rendering engine in your 3D modeling software. Once you have a better understanding of these features, functions, and processes and what they have to offer, you will be able to recognize the full potential of your software and maximize your creative output.

It's important to remember that the more tools and techniques you incorporate into your 3D models, the more computing power is needed to render them. Using an affordable render farm can drastically reduce the processing load and time needed to bring your project to life. If you're ready to render, Render Pool's cloud-based service makes it easy to upload your project and receive your finalized media in minutes. For more information, head over to our How To page or calculate the cost of rendering your project with our Price Estimate Calculator. Happy rendering!